I decided to write this post after reading a question on StackOverflow, where the OP was asking for recommendations to simplify his IoC setup code – to make it more readable and to prevent runtime errors when resolving instances after a configuration change.

I feel his pain. Anyone who has used and configured an IoC container such as Unity knows how easy it is to break things when you add or change a registration. So, how do you detect that things are broken before it’s too late (runtime errors)? By writing tests, obviously.

In this post I’ll show you a quick and simple way to test your dependencies using the Unity IoC container.

The scenario – a shopping cart service

Let’s assume a simple scenario – a service that lets users see all the items in their shopping cart and the corresponding prices. The service uses a logger, a repository to read the cart data, and a currency service to display the prices in the currency selected by the user.

The source code is the following (methods omitted for brevity):

    using System;

    public interface ILogger
    {
    }

    public class AsyncLogger : ILogger
    {
    }

    public class ConsoleLogger : ILogger
    {
    }

    public class FileLogger : ILogger
    {
    }

    public interface ICurrencyApiClient
    {
    }

    public class CurrencyApiClient : ICurrencyApiClient
    {
        private readonly string _apiKey;
        private readonly ILogger _logger;

        public CurrencyApiClient(string apiKey, ILogger logger)
        {
            _apiKey = apiKey ?? throw new ArgumentNullException(nameof(apiKey));
            _logger = logger ?? throw new ArgumentNullException(nameof(logger));
        }
    }

    public interface IRepository
    {
    }

    public class Repository : IRepository
    {
        private readonly string _connectionString;

        public Repository(string connectionString)
        {
            _connectionString = connectionString ?? throw new ArgumentNullException(nameof(connectionString));
        }
    }

    // Implemented by ShoppingCartService below (methods omitted, like the other interfaces).
    public interface IShoppingCartService
    {
    }

    public class ShoppingCartService : IShoppingCartService
    {
        private readonly IRepository _repository;
        private readonly ICurrencyApiClient _currencyApiClient;
        private readonly ILogger _logger;

        public ShoppingCartService(IRepository repository, ICurrencyApiClient currencyApiClient, ILogger logger)
        {
            _repository = repository ?? throw new ArgumentNullException(nameof(repository));
            _currencyApiClient = currencyApiClient ?? throw new ArgumentNullException(nameof(currencyApiClient));
            _logger = logger ?? throw new ArgumentNullException(nameof(logger));
        }
    }

Configuring the dependencies

The first thing to do is to put the IoC code in a class library that can be easily referenced by a test project. You can then add your Bootstrapper class, something like this:

    public class Bootstrapper
    {
        public IUnityContainer Init()
        {
            var container = new UnityContainer();

            // dependencies registration goes here....

            return container;
        }
    }

Please note that the Init() method returns the IoC container – you’ll need it in the unit tests when trying to resolve the instances.

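The full source code of the Bootstrapper is the following:

    using System.Collections.Specialized;
    using System.Configuration;
    // Assumption: Unity 5.x namespaces. Unity 4.x and earlier use Microsoft.Practices.Unity instead.
    using Unity;
    using Unity.Injection;

    public class Bootstrapper
    {
        private readonly NameValueCollection _appSettings;
        private readonly ConnectionStringSettingsCollection _connectionStrings;

        public Bootstrapper(
            NameValueCollection appSettings = null,
            ConnectionStringSettingsCollection connectionStrings = null)
        {
            // fall back to the configuration file when no values are supplied
            _appSettings = appSettings ?? ConfigurationManager.AppSettings;
            _connectionStrings = connectionStrings ?? ConfigurationManager.ConnectionStrings;
        }

        public IUnityContainer Init()
        {
            var container = new UnityContainer();

            // default logger is AsyncLogger
            container.RegisterType<ILogger, AsyncLogger>();

            // named instances for loggers
            container.RegisterType<ILogger, AsyncLogger>(nameof(AsyncLogger));
            container.RegisterType<ILogger, ConsoleLogger>(nameof(ConsoleLogger));
            container.RegisterType<ILogger, FileLogger>(nameof(FileLogger));

            // CreateCurrencyApiClient and CreateRepository are factory methods of this class (not shown here)
            container.RegisterType<ICurrencyApiClient>(new InjectionFactory(CreateCurrencyApiClient));
            container.RegisterType<IRepository>(new InjectionFactory(CreateRepository));

            container.RegisterType<IShoppingCartService, ShoppingCartService>();

            return container;
        }
    }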

Some notes:

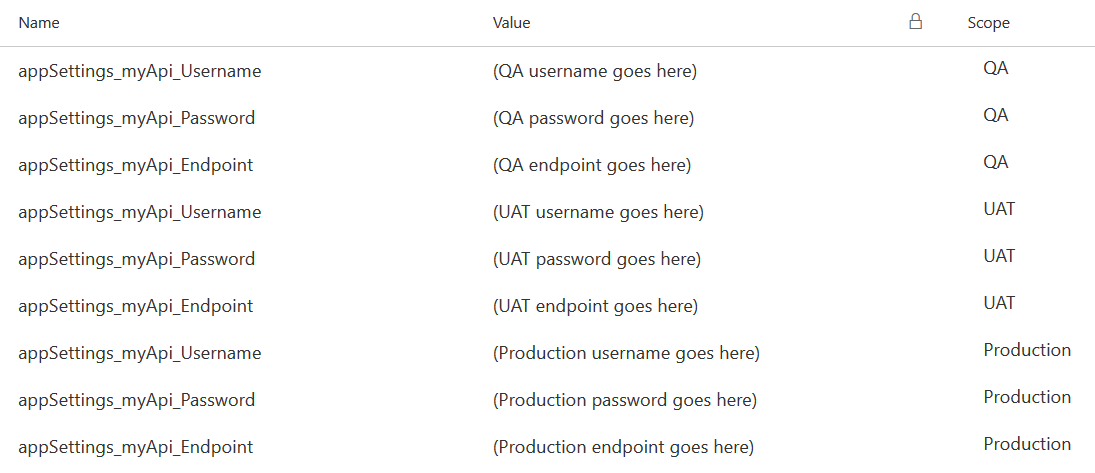

- The constructor takes two optional parameters (appSettings and connectionStrings), which can be used for testing purposes. If no values are provided, it uses the values from the configuration file.

- AsyncLogger, ConsoleLogger and FileLogger are registered as named instances of ILogger; AsyncLogger is also the default (unnamed) registration.

- ICurrencyApiClient and IRepository are registered using a factory method.
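The two factory methods are not shown in the listing above. A minimal sketch of what they might look like follows, assuming the API key lives in appSettings and the connection string in connectionStrings. The setting names `CurrencyApi.ApiKey` and `ShoppingCart` are hypothetical and only serve as an illustration; use whatever keys your configuration file actually contains.

```csharp
// Sketch only: members meant to live inside the Bootstrapper class shown above.
// Requires System.Configuration plus the Unity namespace your project uses.

// Hypothetical setting names (assumptions, not taken from the original code).
private const string CurrencyApiKeySetting = "CurrencyApi.ApiKey";
private const string ShoppingCartConnectionName = "ShoppingCart";

// InjectionFactory accepts a Func<IUnityContainer, object>, so both methods use that shape.
private object CreateCurrencyApiClient(IUnityContainer container)
{
    // The NameValueCollection indexer returns null for a missing key,
    // so the constructor's null check throws when the type is resolved.
    string apiKey = _appSettings[CurrencyApiKeySetting];

    // Resolves the default (unnamed) ILogger registration.
    var logger = container.Resolve<ILogger>();

    return new CurrencyApiClient(apiKey, logger);
}

private object CreateRepository(IUnityContainer container)
{
    // The indexer returns null when no connection string has this name.
    string connectionString = _connectionStrings[ShoppingCartConnectionName]?.ConnectionString;

    return new Repository(connectionString);
}
```

Reading from the injected `_appSettings` and `_connectionStrings` fields, rather than from ConfigurationManager directly, is what makes the optional constructor parameters useful in tests.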

Configuring the unit tests

Now it’s time to write the unit tests. Instead of manually adding tests for every single dependency, we can use the Registrations property of IUnityContainer to get the metadata of all registered dependencies:

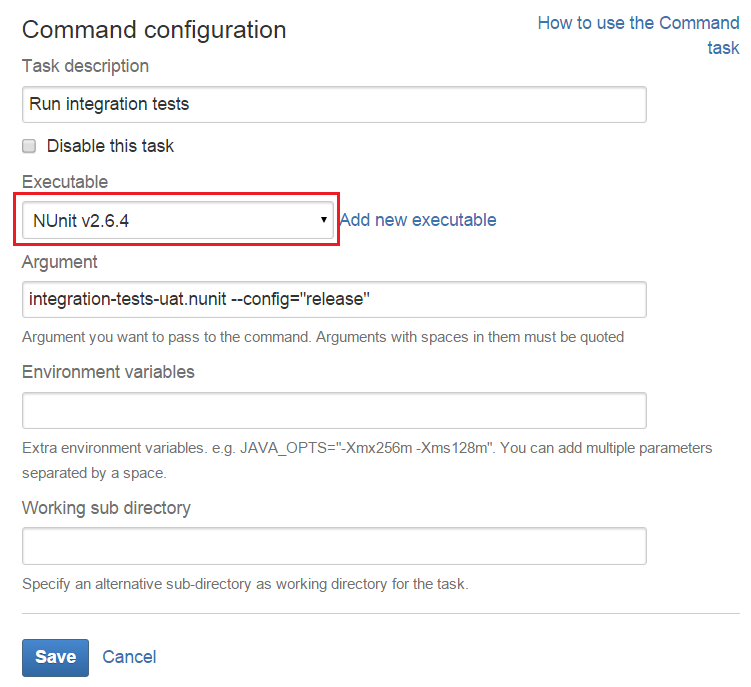

Writing the tests (using NUnit and Fluent Assertions):

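    using System;
    using System.Collections.Generic;
    using System.Linq;
    using FluentAssertions;
    using FluentAssertions.Execution;
    using NUnit.Framework;
    // Assumption: Unity 5.x namespace. Unity 4.x and earlier use Microsoft.Practices.Unity instead.
    using Unity;

    [TestFixture]
    public class BootstrapperTests
    {
        // builds a fresh container on every access
        private static IUnityContainer Container => new Bootstrapper().Init();

        // one test case per registration, skipping the container's registration of itself
        private static IEnumerable<TestCaseData> UnityRegistrations
        {
            get
            {
                var registrations = Container.Registrations
                    .Where(x => x.RegisteredType != typeof(IUnityContainer))
                    .Select(x => new TestCaseData(x.RegisteredType, x.Name));

                return registrations;
            }
        }

        [Test]
        [TestCaseSource(nameof(UnityRegistrations))]
        public void GivenATypeAndName_WhenResolvingInstance_InstanceShouldNotBeNull(Type registeredType, string instanceName)
        {
            // arrange/act
            object instance = Container.Resolve(registeredType, instanceName);

            // assert
            using (new AssertionScope())
            {
                instance.Should().BeAssignableTo(registeredType);
                instance.Should().NotBeNull();
            }
        }
    }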

It’s just as simple as that. Given the registered type and the instance name of the dependencies, I can try to resolve them. If the instance is null or an exception is thrown the test will fail, which means that there is something wrong with our Bootstrapper. Also, I check if the returned instance has the expected type.

Running the tests using ReSharper:

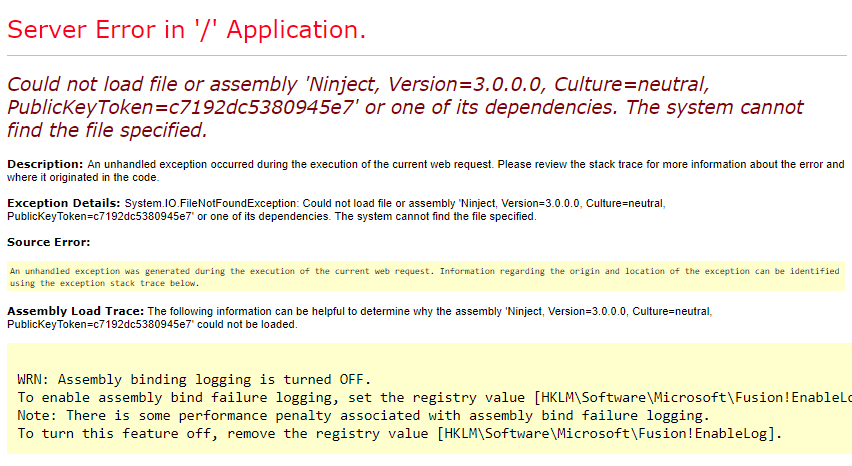

Some tests failed because I forgot to add a configuration file with the settings used by both the CurrencyApiClient and the Repository. The test for IShoppingCartService fails as well because it depends on both of them.

Fixing the code and running the tests:

All good now. As you can see, there is a test for every single type/instance name.
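Adding the configuration file is one way to fix the failures. Another option, sketched below, is to use the Bootstrapper's optional constructor parameters and supply the settings in memory, so the tests do not depend on a configuration file at all. The helper class `TestContainerFactory`, the setting names `CurrencyApi.ApiKey` and `ShoppingCart`, and the values are all hypothetical; the names must match whatever keys the Bootstrapper's factory methods actually read.

```csharp
using System.Collections.Specialized;
using System.Configuration;
using Unity; // assumption: Unity 5.x; Unity 4.x uses Microsoft.Practices.Unity

// Hypothetical test helper that builds the container from in-memory settings.
public static class TestContainerFactory
{
    public static IUnityContainer Create()
    {
        // Hypothetical key and value standing in for the real app setting.
        var appSettings = new NameValueCollection
        {
            { "CurrencyApi.ApiKey", "test-api-key" }
        };

        // Hypothetical connection string name and value.
        var connectionStrings = new ConnectionStringSettingsCollection
        {
            new ConnectionStringSettings(
                "ShoppingCart",
                "Server=(local);Database=ShoppingCartTests;Integrated Security=true")
        };

        // Passing both collections bypasses ConfigurationManager entirely.
        return new Bootstrapper(appSettings, connectionStrings).Init();
    }
}
```

In the test fixture, the Container property would then return `TestContainerFactory.Create()` instead of `new Bootstrapper().Init()`. The trade-off is that in-memory settings no longer exercise the real configuration file, so this suits checking the registrations themselves rather than checking a given environment's configuration.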

Final thoughts

You should add as many unit tests as possible to your code – IoC setup is no exception. Also, these tests do not exclude the usage of other types of tests such as integration or smoke tests.

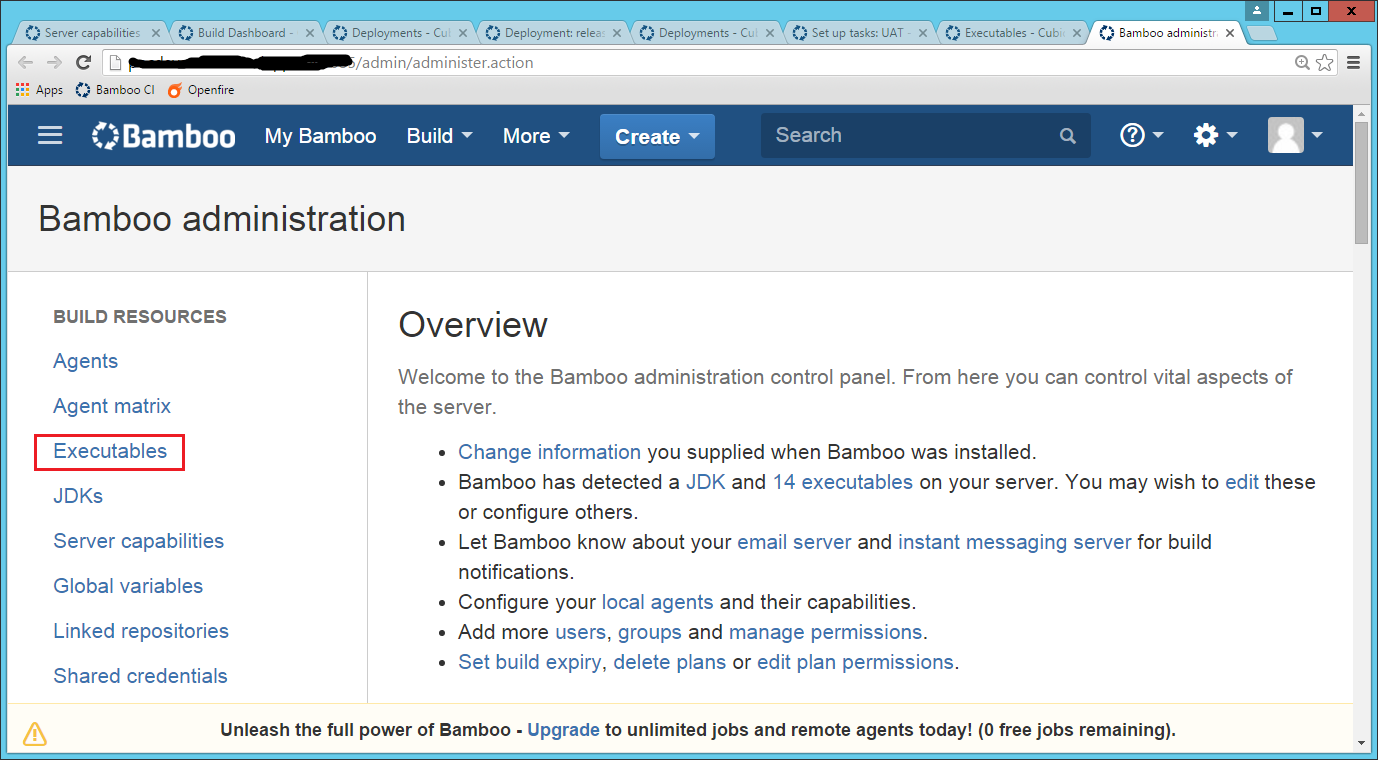

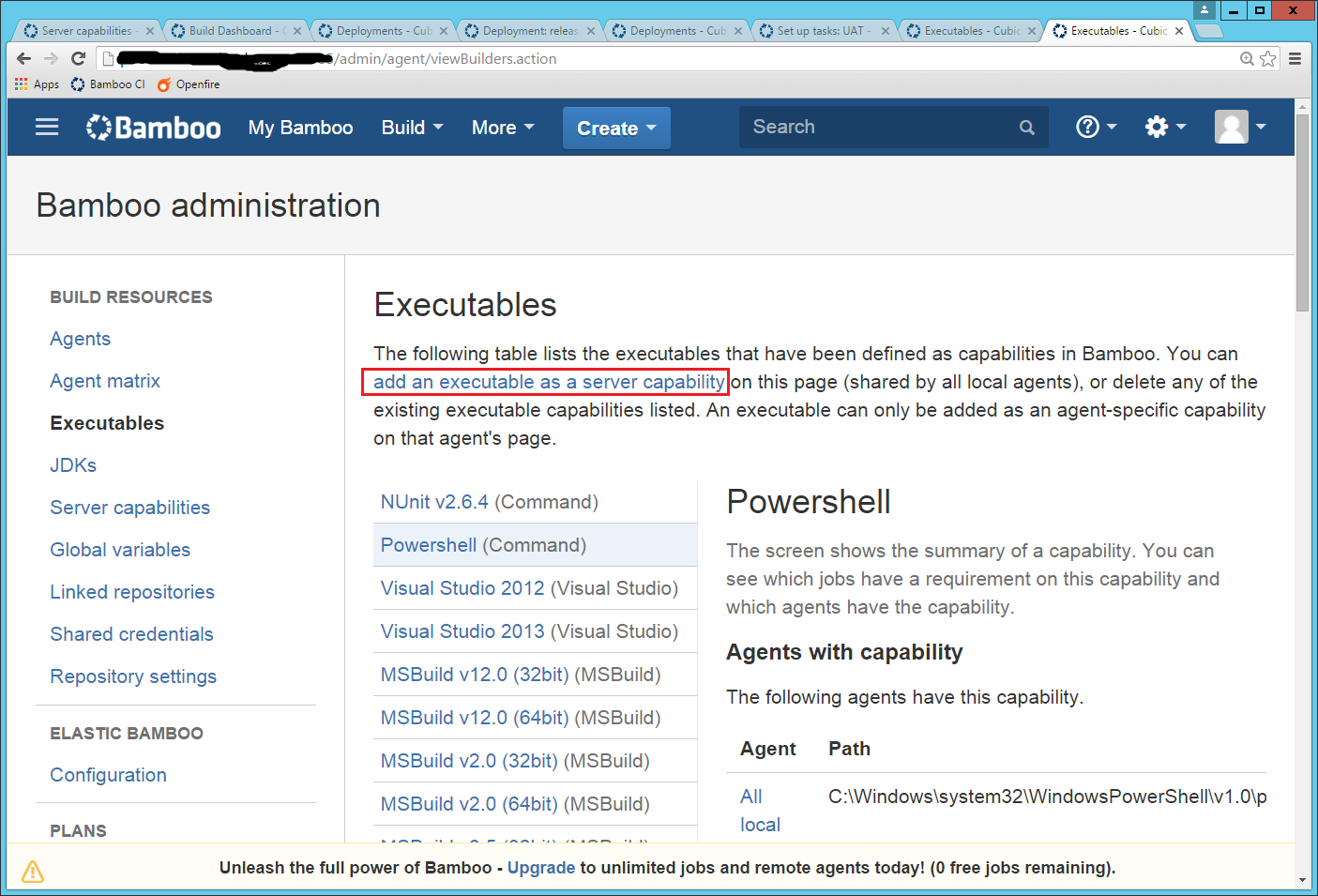

The approach in this article is a good starting point, but it might not be enough. For example, if you have ASP.NET MVC or ASP.NET Web API applications, you should test your DependencyResolver in order to ensure that all controllers are being instantiated correctly (i.e. without throwing exceptions).
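As a rough sketch of that idea for ASP.NET MVC, the same TestCaseSource technique can enumerate the controller types in the web project and try to resolve each one. This is a simplification: it resolves controllers straight from the container rather than going through the DependencyResolver, and `HomeController` is a hypothetical controller used only to locate the web assembly.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Web.Mvc;
using FluentAssertions;
using NUnit.Framework;
using Unity; // assumption: Unity 5.x; Unity 4.x uses Microsoft.Practices.Unity

[TestFixture]
public class ControllerResolutionTests
{
    private static IUnityContainer Container => new Bootstrapper().Init();

    // HomeController is hypothetical: any type from the web project works as an assembly marker.
    private static IEnumerable<TestCaseData> ControllerTypes =>
        typeof(HomeController).Assembly
            .GetTypes()
            .Where(t => t.IsClass && !t.IsAbstract && typeof(IController).IsAssignableFrom(t))
            .Select(t => new TestCaseData(t));

    [TestCaseSource(nameof(ControllerTypes))]
    public void GivenAControllerType_WhenResolvingInstance_InstanceShouldNotBeNull(Type controllerType)
    {
        // Unity can build concrete types that were never registered explicitly,
        // so the test fails only if one of the constructor dependencies cannot be resolved.
        object controller = Container.Resolve(controllerType);

        controller.Should().NotBeNull();
    }
}
```

If your application wires Unity into MVC through a custom IDependencyResolver, resolving through that resolver instead of the raw container would bring the test closer to what happens at runtime.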

Consider also running these tests for every single environment – each environment has its own configuration, so better be safe than sorry 😉

Happy coding!