Today I’ll write about an anti-pattern that I see quite often, regarding the usage of configuration settings. Settings stored in configuration files such as web.config or app.config are a dependency that should be abstracted in order to make your code more flexible and testable!

In this article I’ll show you:

- Some of the problems of using the configuration settings

- How to refactor your code in order to make it more testable

- How to refactor your tests in order to make them CI/CD friendly

The problem – testing code that uses configuration settings

Let’s suppose you are creating a service class that uses a 3rd party REST API. The API’s username, password and endpoint are stored in the configuration file as follows:

<appSettings>

<add key="myApi.Username" value="myusername" />

<add key="myApi.Password" value="mypassword" />

<add key="myApi.Endpoint" value="https://www.myapi.com/v1" />

</appSettings>

Consider the following code:

public class FooService

{

private readonly string _myApiUsername;

private readonly string _myApiPassword;

private readonly string _myApiEndpoint;

public FooService()

{

_myApiUsername = GetConfigValue("myApi.Username");

_myApiPassword = GetConfigValue("myApi.Password");

_myApiEndpoint = GetConfigValue("myApi.Endpoint");

}

private string GetConfigValue(string key)

{

string value = ConfigurationManager.AppSettings[key];

if (string.IsNullOrWhiteSpace(value))

{

string message = $"Could not find AppSettings[\"{key}\"]!";

throw new InvalidOperationException(message);

}

return value;

}

// code omitted for brevity

}

The first problem comes when you try to write some unit tests for this class. Using this approach will force you to have a configuration file in your unit test project, which is not a big deal.

What if you needed to use different values to test other scenarios, such as testing if an InvalidOperationException is thrown when the username, password or endpoint are null or whitespace? You’d have to find a way to override the AppSettings section of the configuration file (ugly stuff, trust me). I’ll show you next how to refactor the code to make it more testable.

Making the code unit-test friendly

Please note that this is not the best solution, just a first step towards making your code more testable. It is ideal for people who, for some reason, cannot spend much time refactoring the code.

The trick is to change the constructor to take an optional NameValueCollection parameter. If this parameter is not set then it will try to get the values from the configuration file (using the ConfigurationManager.AppSettings object):

public class FooService

{

private readonly NameValueCollection _appSettings;

private readonly string _myApiUsername;

private readonly string _myApiPassword;

private readonly string _myApiEndpoint;

public FooService(NameValueCollection appSettings = null)

{

_appSettings = appSettings ?? ConfigurationManager.AppSettings;

_myApiUsername = GetConfigValue("myApi.Username");

_myApiPassword = GetConfigValue("myApi.Password");

_myApiEndpoint = GetConfigValue("myApi.Endpoint");

}

private string GetConfigValue(string key)

{

string value = _appSettings[key];

if (string.IsNullOrWhiteSpace(value))

{

string message = $"Could not find AppSettings[\"{key}\"]!";

throw new InvalidOperationException(message);

}

return value;

}

// service methods go here

}

Setting the values in a unit test is easy:

// arrange

var settings = new NameValueCollection {

{"myApi.Username", "myusername"},

{"myApi.Password", "mypassword"},

{"myApi.Endpoint", "myendpoint"}

};

var service = new FooService(settings);

// act

// ....

// assert

// ....

Another example – testing if an exception is thrown when the username is empty:

// arrange

var settings = new NameValueCollection {

{"myApi.Username", ""},

{"myApi.Password", "mypassword"},

{"myApi.Endpoint", "myendpoint"}

};

FooService service = null;

// act / assert

Assert.Throws<InvalidOperationException>(() => {

service = new FooService(settings);

});

Code is now testable, cool! I am now able to use different settings and run tests on my machine (“it works on my machine”, hurray!). But this is not good enough!

Making the code testable and CI/CD friendly

The previous refactoring is a very quick way to make code testable, but it can be improved in terms of testability and readability. The first thing I don’t like is the use of a NameValueCollection object to hold the settings. I’d rather define an interface and a class like these:

public interface IApiSettings

{

string MyApiEndpoint { get; }

string MyApiPassword { get; }

string MyApiUsername { get; }

}

public class ApiSettings : IApiSettings

{

public string MyApiUsername { get; }

public string MyApiPassword { get; }

public string MyApiEndpoint { get; }

public ApiSettings(string myApiUsername, string myApiPassword, string myApiEndpoint)

{

if (string.IsNullOrWhiteSpace(myApiUsername))

{

throw new ArgumentException("Username cannot be null or whitespace.", nameof(myApiUsername));

}

if (string.IsNullOrWhiteSpace(myApiPassword))

{

throw new ArgumentException("Password cannot be null or whitespace.", nameof(myApiPassword));

}

if (string.IsNullOrWhiteSpace(myApiEndpoint))

{

throw new ArgumentException("Endpoint cannot be null or whitespace.", nameof(myApiEndpoint));

}

MyApiUsername = myApiUsername;

MyApiPassword = myApiPassword;

MyApiEndpoint = myApiEndpoint;

}

}

Refactoring FooService, one more time:

public class FooService

{

private readonly IApiSettings _settings;

public FooService(IApiSettings settings)

{

_settings = settings ?? throw new ArgumentNullException(nameof(settings));

}

// service methods go here

}
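With this design, the production code can build the settings object once at application start-up and hand it to FooService. The following is only a minimal sketch of that wiring: `ApiSettingsFactory` and `FromAppSettings` are hypothetical names that are not part of the original code, and the compact `ApiSettings` below is a trimmed stand-in for the class shown earlier (validation left out) so that the example compiles on its own.

```csharp
using System;
using System.Collections.Specialized;

public interface IApiSettings
{
    string MyApiEndpoint { get; }
    string MyApiPassword { get; }
    string MyApiUsername { get; }
}

// Trimmed stand-in for the ApiSettings class above (validation omitted for brevity).
public sealed class ApiSettings : IApiSettings
{
    public ApiSettings(string myApiUsername, string myApiPassword, string myApiEndpoint)
    {
        MyApiUsername = myApiUsername;
        MyApiPassword = myApiPassword;
        MyApiEndpoint = myApiEndpoint;
    }

    public string MyApiUsername { get; }
    public string MyApiPassword { get; }
    public string MyApiEndpoint { get; }
}

// Hypothetical helper: maps the appSettings keys onto the typed settings object.
public static class ApiSettingsFactory
{
    public static IApiSettings FromAppSettings(NameValueCollection appSettings)
    {
        if (appSettings == null)
        {
            throw new ArgumentNullException(nameof(appSettings));
        }

        // A missing key yields null here; the full ApiSettings class would reject it.
        return new ApiSettings(
            appSettings["myApi.Username"],
            appSettings["myApi.Password"],
            appSettings["myApi.Endpoint"]);
    }
}
```

At start-up you could then call `ApiSettingsFactory.FromAppSettings(ConfigurationManager.AppSettings)` and pass the result to the FooService constructor, which keeps ConfigurationManager out of the service itself.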

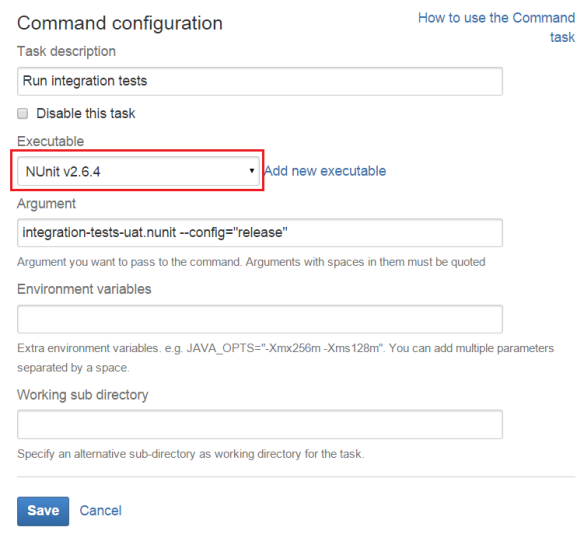

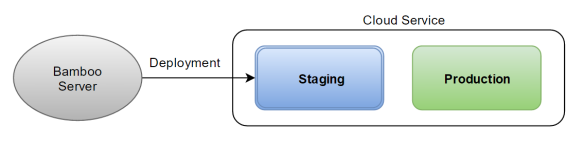

Now let’s talk again about the tests – things are a bit different when we’re running tests in a build or deployment pipeline, compared to our local machine.

Different environments will have different configuration settings (connection strings, API endpoints, etc.), so it’s extremely important to know which environment the tests are running in and to load the corresponding settings.

Also, where do we store these settings? We have at least these options:

- Create one configuration file per environment

- Configure environment variables per environment

It’s probably easier to use configuration files locally, but what about the build/deployment pipeline? Configuration files might be an option for some environments but not for others, such as Production (for security reasons). Instead, these settings can be set (manually or dynamically) using environment variables in the build/deployment pipeline.

I have used the following approach that works for both scenarios:

- if the environment variable exists then use it

- otherwise, use the value from the configuration file

Just to be completely clear, the environment variable takes precedence over the setting from the configuration file. I have created a helper class that gets the right values for the tests, following the approach above:

public static class ConfigurationHelper

{

public static string GetEnvironmentOrConfigValue(string key, string defaultValue = null)

{

if (string.IsNullOrWhiteSpace(key))

{

throw new ArgumentException("Value cannot be null or whitespace.", nameof(key));

}

string value = GetEnvironmentValue(key);

if (!string.IsNullOrWhiteSpace(value))

{

return value;

}

value = GetConfigValue(key);

if (!string.IsNullOrWhiteSpace(value))

{

return value;

}

return defaultValue;

}

private static string GetConfigValue(string key)

{

string value = ConfigurationManager.AppSettings[key];

return value;

}

private static string GetEnvironmentValue(string key)

{

string variableName = string.Concat("appSettings_", key.Replace(".", "_"));

string value = Environment.GetEnvironmentVariable(variableName);

return value;

}

}

Refactoring the tests:

// arrange

var username = ConfigurationHelper.GetEnvironmentOrConfigValue("myApi.Username");

var password = ConfigurationHelper.GetEnvironmentOrConfigValue("myApi.Password");

var endpoint = ConfigurationHelper.GetEnvironmentOrConfigValue("myApi.Endpoint");

var settings = new ApiSettings(username, password, endpoint);

var service = new FooService(settings);

// act

// ....

// assert

// ....

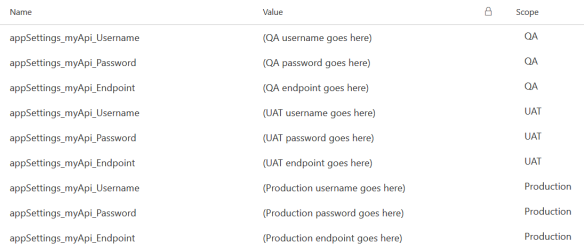

A quick note – you can use the same names for both the appSettings and the environment variables, or follow a naming convention. In my example above, the configuration setting “myApi.Username” corresponds to the environment variable “appSettings_myApi_Username”. You can use any convention you want.
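The naming convention and the precedence rule can be tried out together in a small, self-contained sketch. It is an illustration under assumptions, not the helper class itself: `EnvironmentFirstLookup`, `ToVariableName`, `Resolve` and `Demo` are hypothetical names, a plain dictionary stands in for the appSettings section, and the QA endpoint URL is made up.

```csharp
using System;
using System.Collections.Generic;

public static class EnvironmentFirstLookup
{
    // Same convention as above: "myApi.Username" -> "appSettings_myApi_Username".
    public static string ToVariableName(string key)
    {
        return string.Concat("appSettings_", key.Replace(".", "_"));
    }

    // Returns the environment variable when it is set, otherwise the file value, otherwise null.
    public static string Resolve(string key, IDictionary<string, string> fileSettings)
    {
        string fromEnvironment = Environment.GetEnvironmentVariable(ToVariableName(key));
        if (!string.IsNullOrWhiteSpace(fromEnvironment))
        {
            return fromEnvironment;
        }

        string fromFile;
        return fileSettings.TryGetValue(key, out fromFile) ? fromFile : null;
    }
}

public static class Demo
{
    public static void Run()
    {
        // Stand-in for the values in the configuration file.
        var fileSettings = new Dictionary<string, string>
        {
            { "myApi.Endpoint", "https://www.myapi.com/v1" }
        };

        string variableName = EnvironmentFirstLookup.ToVariableName("myApi.Endpoint");

        // Passing null removes the variable, so the file value is used.
        Environment.SetEnvironmentVariable(variableName, null);
        Console.WriteLine(EnvironmentFirstLookup.Resolve("myApi.Endpoint", fileSettings));
        // prints "https://www.myapi.com/v1"

        // Once the variable is set (as a pipeline would do), it takes precedence.
        Environment.SetEnvironmentVariable(variableName, "https://qa.example.test/v1");
        Console.WriteLine(EnvironmentFirstLookup.Resolve("myApi.Endpoint", fileSettings));
        // prints "https://qa.example.test/v1"
    }
}
```

Keeping the name mapping in a single method means the convention can be changed in one place if your build server imposes different rules for variable names.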

So, for the following configuration settings

<appSettings>

<add key="myApi.Username" value="myusername" />

<add key="myApi.Password" value="mypassword" />

<add key="myApi.Endpoint" value="https://www.myapi.com/v1" />

</appSettings>

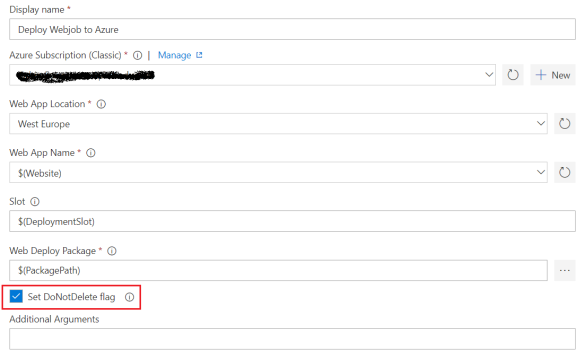

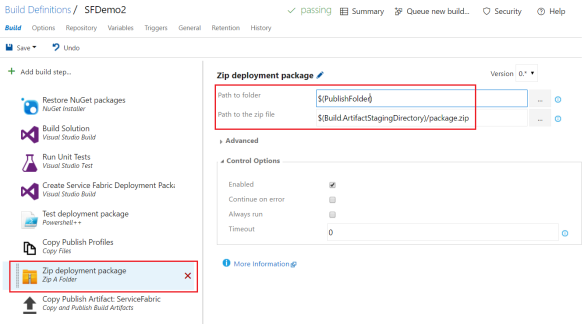

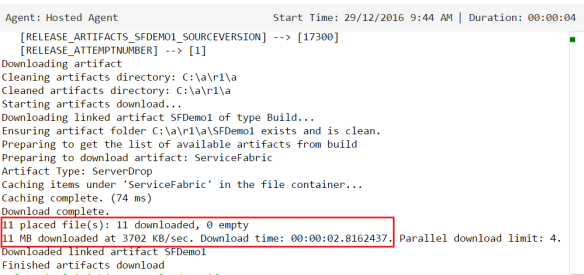

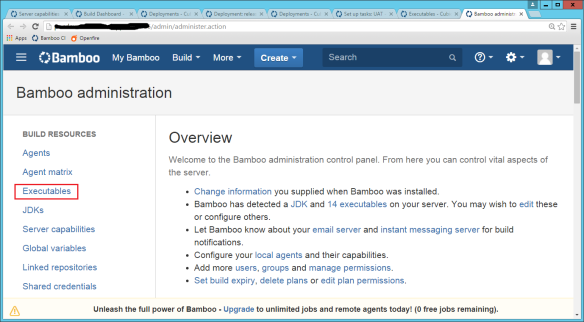

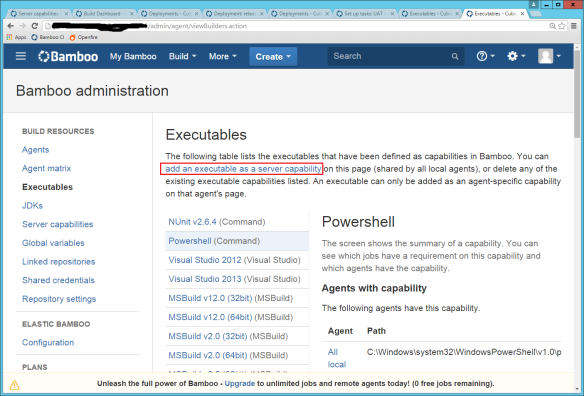

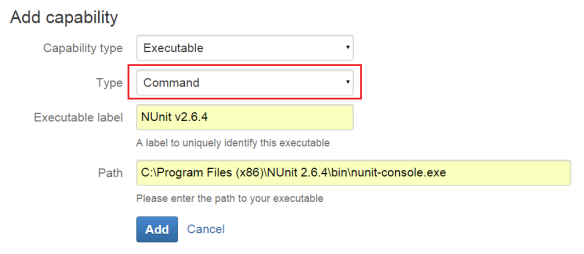

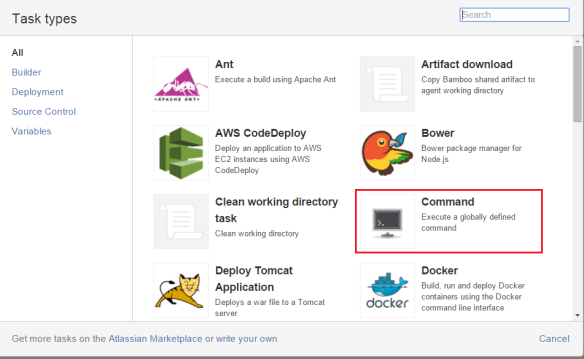

I’d need to configure the corresponding environment variables in the build server, for each environment. Something like this:

(Please note that this screenshot was taken from a demo release definition I created in Visual Studio Team Services (VSTS) that contains 3 environments: QA, UAT, Production).

That’s it! When the tests run on the build server they will use the environment variable values defined above. Another good thing about this approach is that you can mix both sources on the build server: values from the configuration file for some settings and environment variables for others.

Happy coding!